Apple has disclosed that the artificial intelligence models behind its new Apple Intelligence system were trained on Google’s custom-designed chips. The disclosure, detailed in a technical paper published on Monday, shows Apple relying on hardware from another tech giant to build its models.

Alternatives to Nvidia’s Dominance

Google’s Tensor Processing Units (TPUs), initially created for internal workloads, have since found broader adoption, including at Apple. The move signals a shift in the tech industry, as major companies such as Apple explore alternatives to Nvidia’s GPUs, which have dominated the AI training market. Those GPUs have been in such high demand that they are difficult to procure in sufficient quantities for large-scale AI projects.

Apple’s decision to utilize Google’s TPUs, specifically designed for efficient AI processing, demonstrates a strategic pivot. This collaboration points to a growing trend among tech companies seeking diversified and reliable AI training resources.

Details from Apple’s Technical Paper

In its technical paper, Apple outlined how it pre-trained its AI models on Google’s Cloud TPU clusters. The paper described the use of TPU v5p chips to train the model that runs on devices and TPU v4 chips to train the model that runs on servers, in each case with thousands of processors working in unison. This setup allowed Apple to scale its AI training efficiently across models of different sizes.

Apple’s use of Google’s TPUs underscores a pragmatic approach to AI development, ensuring that its models are trained on some of the most advanced hardware available. The paper did not directly name Google or Nvidia but highlighted the use of “Cloud TPU clusters.”

Future of Apple Intelligence

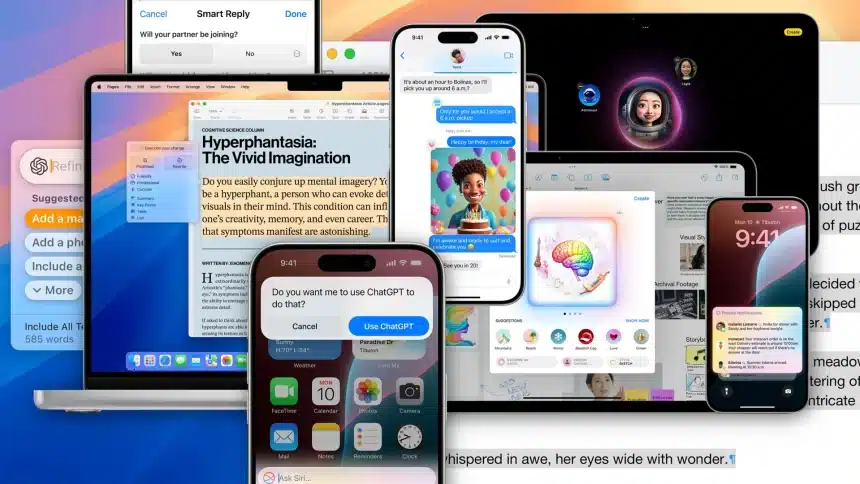

Apple Intelligence, introduced alongside this announcement, includes several AI-driven features such as an updated Siri, enhanced natural language processing, and AI-generated summaries. Over the next year, Apple plans to roll out additional features based on generative AI, including image and emoji generation, as well as an upgraded Siri capable of accessing and acting on users’ personal information within apps.

This marks a significant step for Apple, which has been more cautious than its peers in publicly embracing generative AI technologies. The collaboration with Google positions Apple to leverage advanced AI capabilities while managing the high costs and logistical challenges associated with AI training.

Apple’s decision to train its AI models on Google’s TPUs is a notable moment in the tech industry’s ongoing AI development. By diversifying its training resources beyond Nvidia’s GPUs, Apple aims to enhance its AI capabilities while maintaining efficiency and scalability. The move suggests that access to alternative training hardware is becoming a strategic consideration for companies building large AI models.